How to create a unified data lake with Tabular in 5 mins

With {AWS EMR, Starburst Trino} as Execution Engines on S3 using Tabular

This article will demonstrate how quickly and easily a transactional data lake can be built utilizing tools like Tabular, Spark (AWS EMR), Trino (Starburst), and AWS S3. This blog will show how seamless the interoperability across various computation engines is.

Here is a high-level view of what we would end up building –

Pre-requisites

Services required would be -

Tabular: You can sign up here and create an account.

Starburst: You can sign up here and create an account.

AWS EMR: We will be using EMR for Spark execution engine.

AWS S3 for storage.

Getting started!

Step 1: Step up the Tabular Warehouse

Create a Tabular warehouse (WarehouseForBlog) on your own S3 bucket by following this tutorial -

Smoke tests the created warehouse and see “WarehouseForBlog“ in the list of available warehouses -

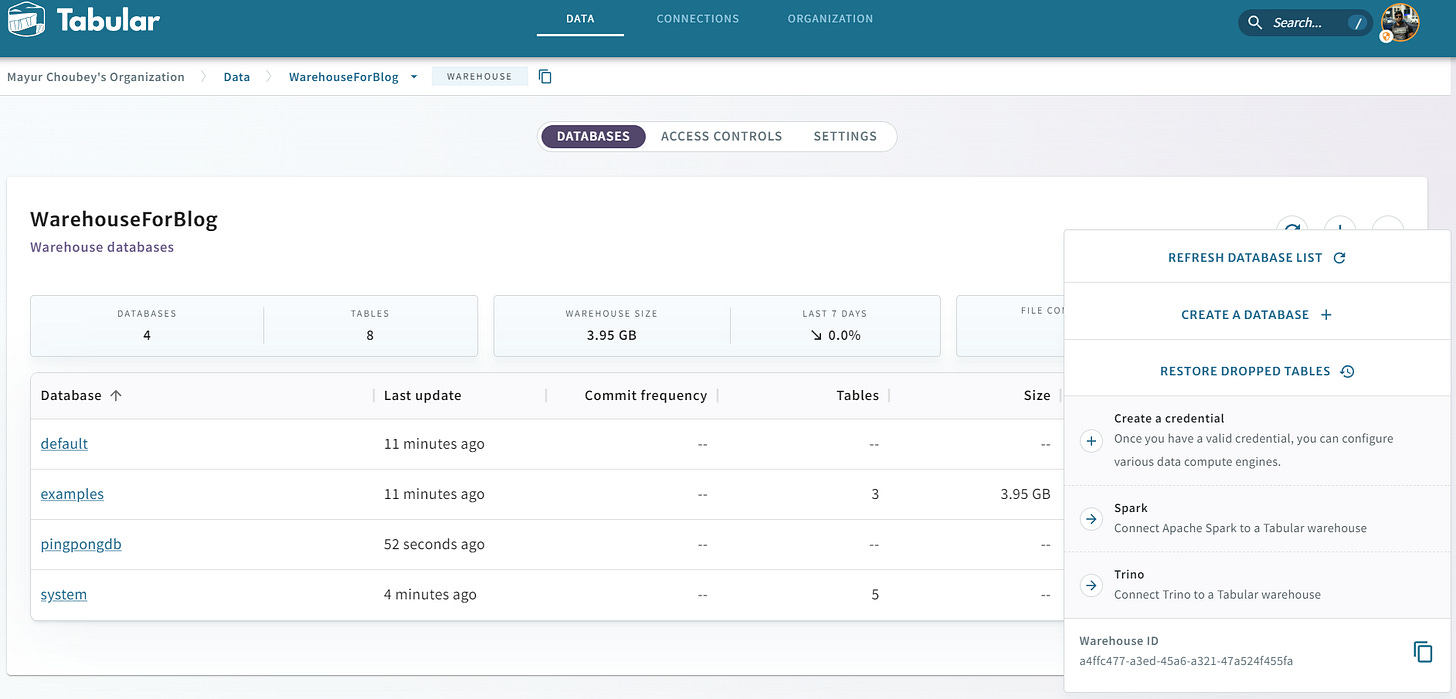

Drill down into warehouse to see available databases -

For the demonstration, we will create a new database “pingpongdb”

Finally, generate two credentials for Spark and Trino connectivity. Save these credentials, as they will be used in the following steps.

Step 2: Connet Starburst Trino with Tabular

Follow a few simple steps to establish a connection between Starburst and Tabular.

Once connection is established, we can see our warehouse “WarehouseForBlog” and database “pingpongdb” inside it. Please note, this database is still empty.

Step 3: Create an AWS EMR Spark cluster

Make sure to use old EMR console to create the cluster. New EMR console is buggy and doesn’t create functional cluster with Iceberg and Tabular jars.

Make sure to use the latest version of EMR. Currently its 6.10.x.

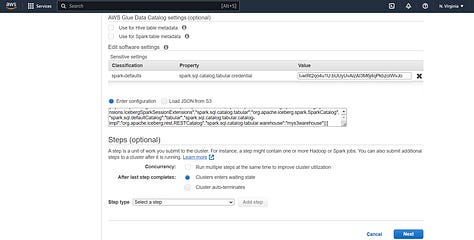

Make sure to configure Spark using below configurations.

[ { "classification":"spark-defaults", "properties":{ "spark.sql.extensions":"org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions", "spark.sql.defaultCatalog":"tabular", "spark.sql.catalog.tabular":"org.apache.iceberg.spark.SparkCatalog", "spark.sql.catalog.tabular.catalog-impl":"org.apache.iceberg.rest.RESTCatalog", "spark.sql.catalog.tabular.uri":"https://api.tabular.io/ws", "spark.sql.catalog.tabular.credential": "<your-tabular-credential>", "spark.sql.catalog.tabular.warehouse": "<your-warehouse-name>" } } ]

Make sure to create a bootstrap action and provide this shell script. This script will fetch Iceberg and Tabular jars from internet and deploy it in Spark/Libs folder.

s3://tabular-repository-public/aws/emr/bootstrap/tabular-runtime.sh

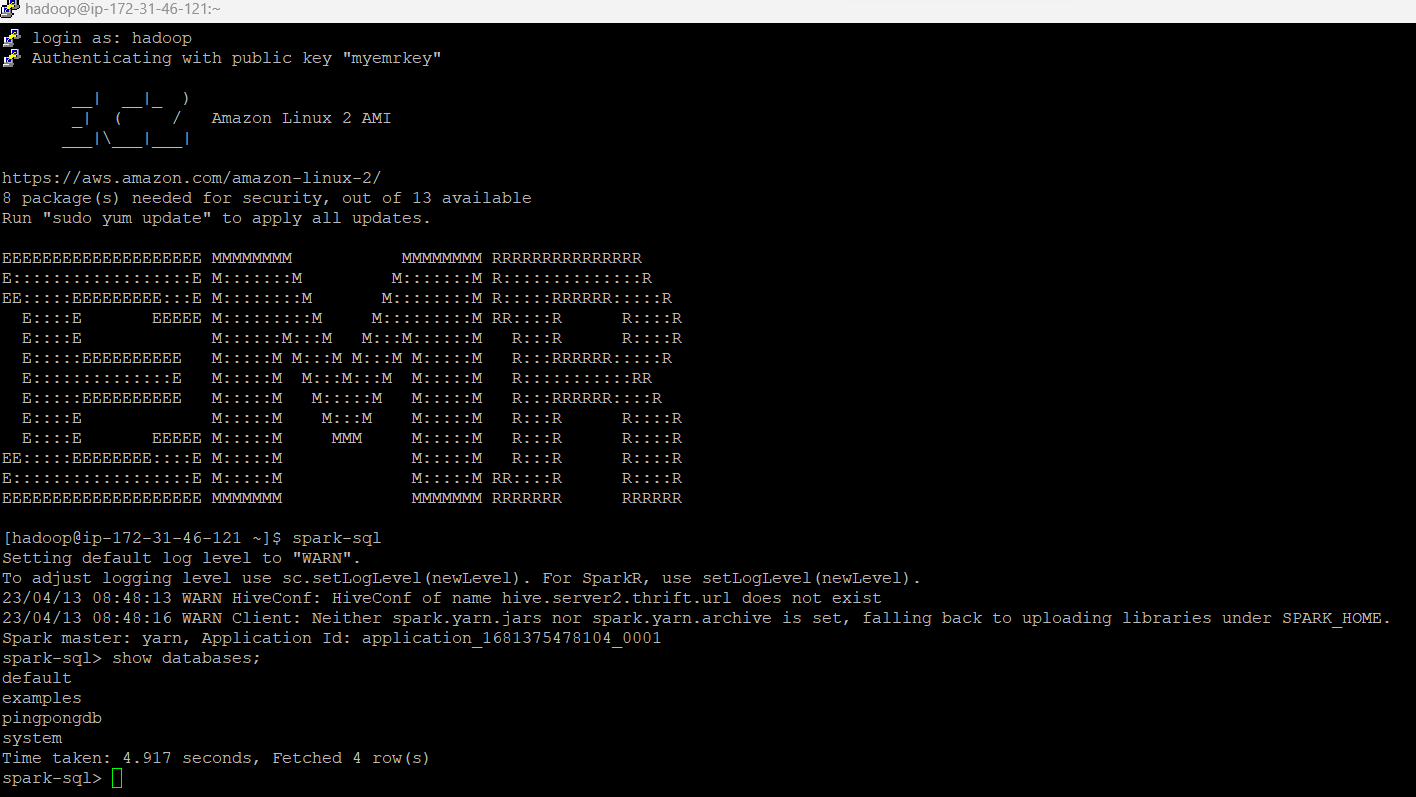

Smoke tests the EMR installation

As you can see, EMR is also connected with Tabular’s Catalog and can list the same databases in Spark shell.

Let’s play ping-pong!

This section will demonstrate how flexible it is to bring our own execution engine and do the analysis on top of the shared data lake.

For the sake of simplicity, we will create a new table from Spark and later will query it from Trino. This is to simulate an environment where Spark would be used as a tool for data ingestion and later use Trino for ad-hoc querying.

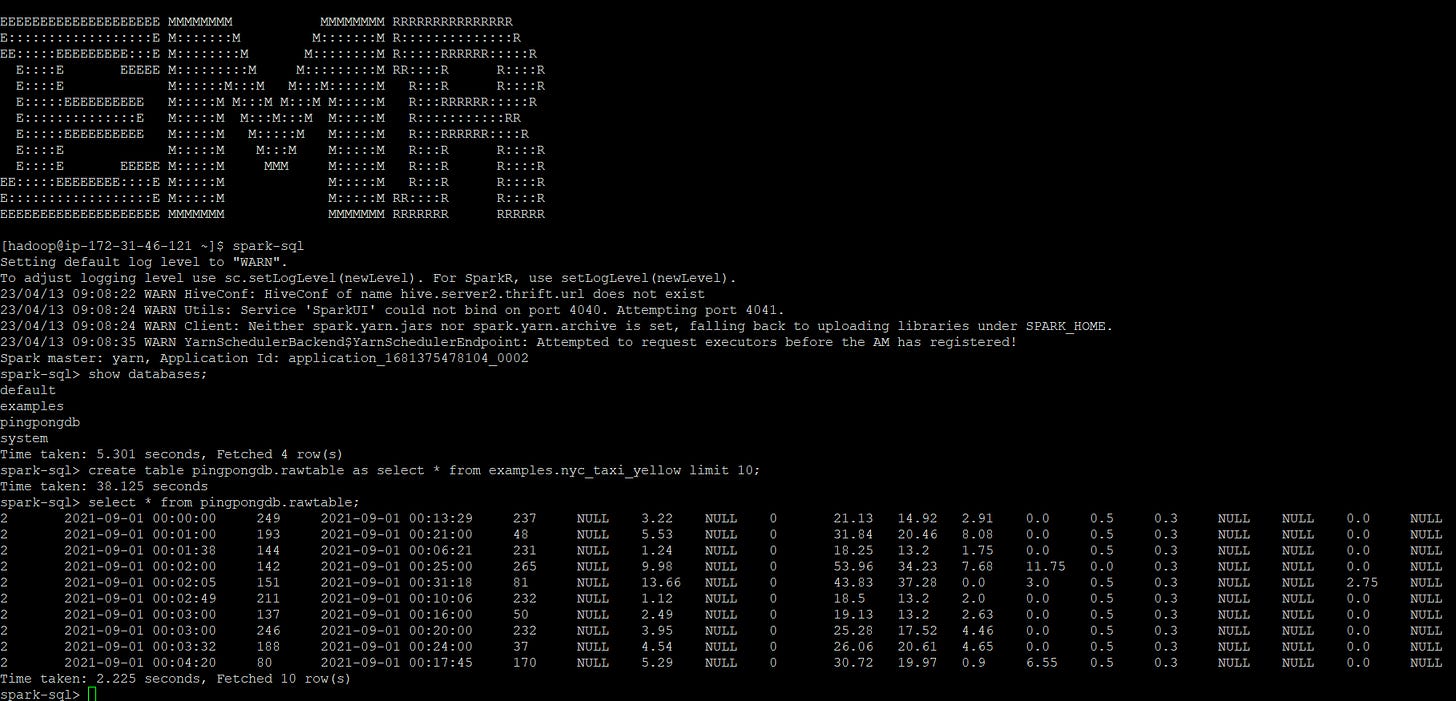

Launch the spark-sql shell again and execute below query -

create table pingpongdb.rawtable as select * from examples.nyc_tax_yellow limit 10

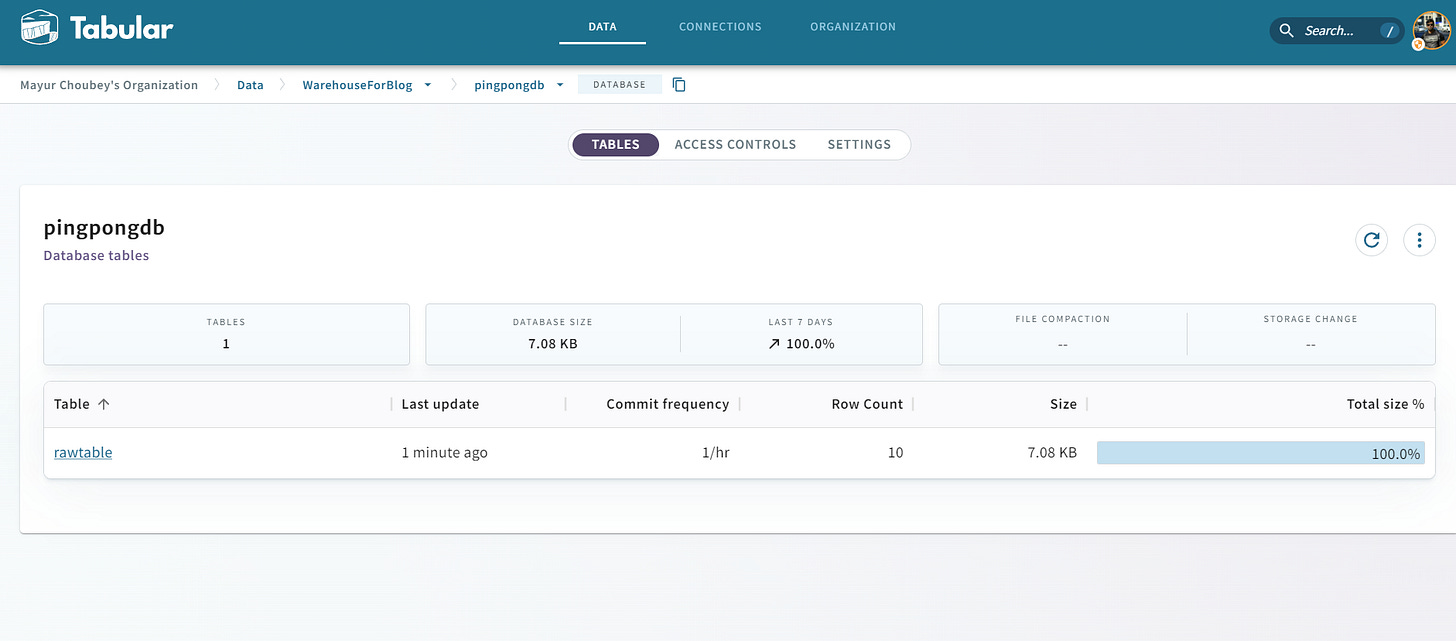

Now let’s check if the change is reflected on the tabular console or not.

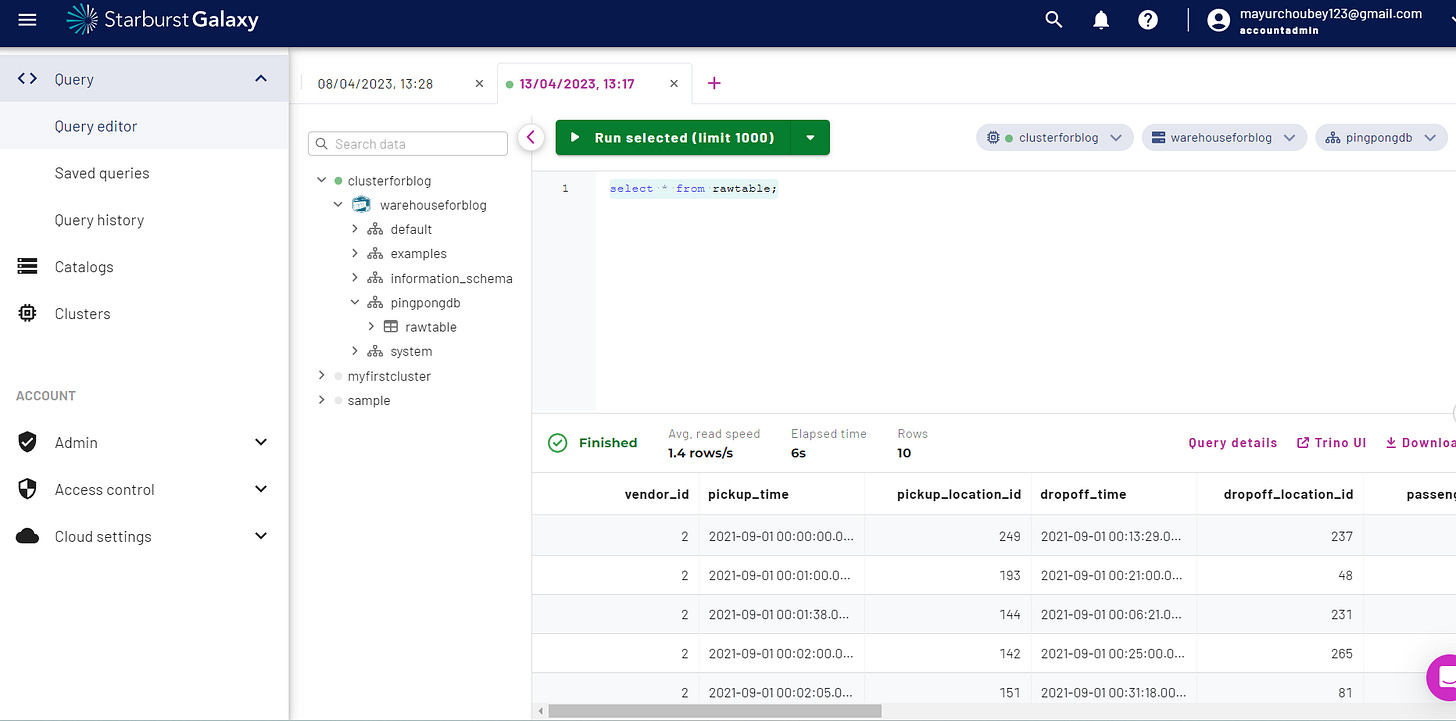

Finally, let’s go back to the Starburst console, explore the pingpongdb database, and verify if the newly created rawtable from Spark execution engine is visible or not. It should be!

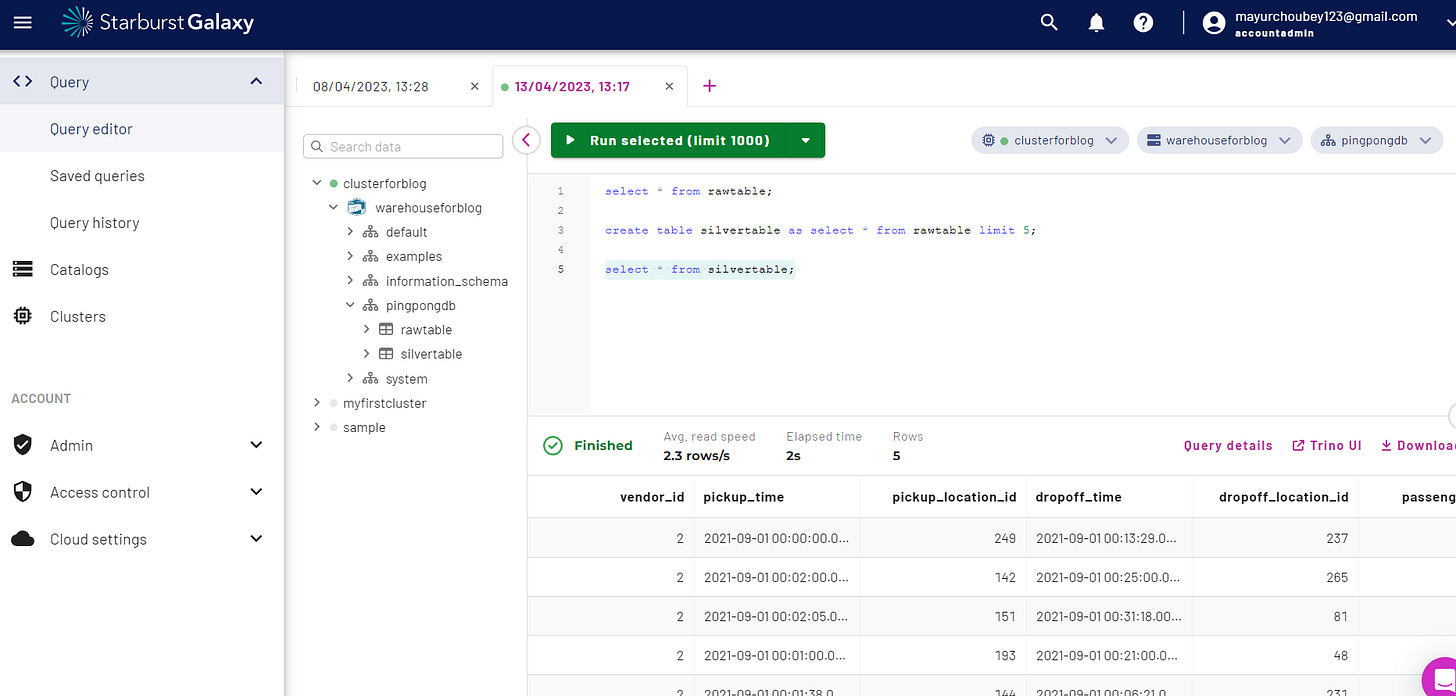

Further, we can check the other way around: Let’s create a silver table in Starburst Trino and then go back to Spark and check the state.

Summary

Using Tabular on top of tools like Apache Iceberg and S3, we can quickly set up a unified transactional data lake. We also created a simulation of a setting in which users may bring their own processing engines, such as Spark or Trino, to the unified data lake to process the data without the need for expensive ETL/ELT procedures.